In 2020, PayPal cofounder Peter Thiel underlined two poles of technological innovation and conflict – one being bitcoin (and crypto), and the other being artificial intelligence. As with nuclear technology, there is a dual-use nature inherent in both crypto and AI.

Specifically, AI could “theoretically make it possible to centrally control an entire economy”, while crypto “holds out the prospect of a decentralised and individualised world”. In his thesis, Thiel draws a dichotomy between the two technologies, which are in an arms race for primacy in the 21st century.

“If we say crypto is libertarian and that it is fundamentally a force for decentralisation, then I think we should also be willing to say that AI, especially in the low-tech surveillance form, is essentially communist.”

Indeed, the crypto proposition in a nutshell is decoupling money from the state, in much the same spirit as countries separated Church from state during the Enlightenment – a turn of events that’s widely viewed as positive. Bitcoin is the pioneering technology in this regard. On the other hand, deep-learning AI algorithms are contingent on centralised databases controlled by a handful of people. ChatGPT’s meteoric rise is a testament to the positive attributes of powerful AI tools. But in some sense, crypto and AI are diametrically opposed.

Chatbots versus crypto

The last six months can be characterised as a journey of mainstream discovery of artificial intelligence. Image generators like Midjourney and text generators like ChatGPT have been at the forefront of immense public fascination and debate. ChatGPT can interact in a conversational style and provide human-like responses. The text-based chatbot also drafts prose, poetry and computer code when prompted. Unlike the empty promises of flying cars in the 70s and 80s, and self-driving cars more recently, these applications seem ready for commercial deployment in the world of bits.

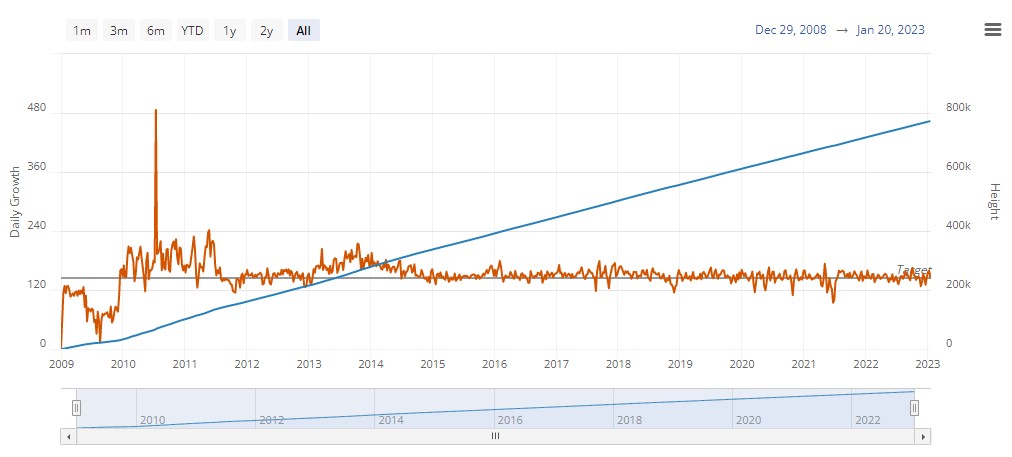

At a bare minimum, AI-based projects will compete with crypto for investment and funding for as long as they capture people’s attention and imagination. And while the litany of crypto fraud was a consequence of centralised actors, there is an undeniable contagion effect of reputational damage for the crypto sector. This has no bearing on long-standing, high-integrity public blockchains like Bitcoin and Litecoin, which are still reliably producing blocks regardless of fiat-denominated prices.

Still, there is an opening for AI-based investments. And indeed, the diverse crypto space hasn’t wasted any time in jumping on the bandwagon, with projects like Fetch and Ocean Protocol riding the AI hype wave. But there are also deeper differences between public cryptos and artificial intelligence, in both structure and underlying ethos.

In terms of structure, AI/ML algorithms rely on large databases for training, and these databases have created the very privacy and security threats that crypto communities oppose. The dependence on data caches paints a bullseye on the technology for capture by large centralised corporations. Admittedly, a similar divide exists between proof-of-work (PoW) and proof-of-stake (PoS) blockchains, whereby PoS coins are vulnerable to regulatory capture and PoW coins are highly resistant. Microsoft’s $10 billion investment in OpenAI and ChatGPT shows the tantalising allure of public-private capture. The tech giant’s CEO, Satya Nadella, even told Davos attendees last week that the company plans to incorporate OpenAI’s tools into every Microsoft product.

Technological pitfalls

Unsurprisingly, AI/ML technology is a double-edged sword. A less forgiving, and perhaps more accurate, interpretation would be that OpenAI’s technology in the hands of mega corporations and rogue governments is a recipe for disaster. For instance, how much worse would the public dialogue around mRNA vaccines have been had there been a system of automated censorship in place (instead of deliberate censorship via social media fact checkers and viewer throttling)?

The idea of public platforms automating and promoting certain narratives over others flies in the face of free speech and reasonable public debate, not to mention every constitution in the West. Left unchecked, there is a scenario whereby users are manipulated without even noticing, and in a way that does not happen in real-life conversations. In other words, algorithmic automation can create alternative reality bubbles, whereby algorithms pick and choose what’s visible and what’s not, what’s trending, and what is true. The parameter inputs are still human, mind you, which is to say that generative content is still a derivative of human actions (and therefore subject to human error). But in the wrong hands, censorship, psyops, and fake expertise could be infinitely scaled on platforms that employ such tools with devious intentions or lacklustre oversight.

With the playful emergence of the ChatGPT tool, it is also possible to miss the forest for the trees. In 2021, Peter Thiel criticised Google’s collaborative work on AI with Chinese universities, warning that the technology will be used to increase civilian surveillance. Since “everything in China is a civilian-military fusion”, bad-faith or compromised governments will use AI-powered facial recognition technology against dissenters and the population at large. The risk is that such tools are adopted wholesale by Western democracies. For instance, during the pandemic, biometric iris data gathering at airports became normal in some countries, despite there being no precedent for it.

A centralised-decentralised axis

Researcher and publisher Ben Goertzel, who has been working on AI since the late 1980s, is credited with popularising the term “artificial general intelligence”, or AGI. The term casts a wide net and describes an intelligent non-human agent that can learn any task that a human being can. In other words, the catch-all phrase can mean everything and therefore nothing.

Still, Goertzel’s curiosity-driven approach to AI is in contrast to that of the current mainstream poster boy, Sam Altman, the CEO and co-founder of OpenAI, the company behind ChatGPT. And herein lies the source of deep concern. Altman is also behind Worldcoin, a project that is intent on creating unique digital identities for global citizens by scanning their irises. Altman’s Worldcoin project harvests incredibly sensitive biometric data from populations in a self-described effort to create Universal Basic Income (UBI) under a global government. The implied relationship between biometric data gathering and generative AI paints a dystopian future which is in keeping with Thiel’s proposal that AI is ‘sort of communist’.

The profoundly uncharismatic idea is that once AI wipes out everyone’s job (it will more likely augment jobs and create new ones), a government or ‘public-private’ entity would only need to tax AI administrators at a high enough rate to redistribute wealth to the plebeian masses. The eyeball scan is supposedly intended to ensure that nobody defrauds this system by collecting multiple payments. To put it diplomatically, that doesn’t sound very convincing. And in my estimation, public-private partnerships are euphemisms for unbridled corruption, wherein governments represent themselves and stakeholders instead of the diverse interests of the population at large, interests that are best served by the classically liberal ideas enshrined in Western constitutions.

Given what we know about Central Bank Digital Currencies, it’s not a stretch to say that there are simply too many red flags to count. This Silicon Valley UBI vision is a dystopian nightmare which combines the worst features of crypto (i.e. programmable tokens) with rebranded communism (i.e. a handful of organisations own everything you can think of, including your biometric data). It would be a future in which normal people are entirely dependent on the generosity of a few autocrats, a kind of new-age feudalism. It would be run by a technocratic elite whose algorithms are designed to harvest data on populations who simply don’t know any better because they’re locked in alternative reality bubbles.

Like all things, libertarian principles exist only to the degree that people are willing to defend them. Decentralised monetary technologies empower individuals and businesses, while centralised technologies (in the wrong hands) incentivise a public-private cabal to chase a self-serving technocratic dystopia. While the dichotomy is by no means comprehensive, it highlights three fundamental points:

- Bitcoin, Litecoin and some cryptocurrencies are structurally different to artificial intelligence algorithms.

- These structural differences lend themselves to opposing values and visions of the future.

- Technology is amoral and entirely dependent on human actors.